Software developers and ML engineers want to run their applications as fast as possible, but they also care a lot about ease of programming and deployment. This is why many of them prefer Python, Java, or C++ over C or other lower-level programming languages.

In other words, they look not only for a cost-efficient way to train and deploy models but also for a simpler way to interact with their hardware, which is why CPUs are so widely used.

Abstraction layers in computing systems enable seamless deployment and ease of programming. The same applies to hardware resources, where abstraction layers allow better resource utilization and lower deployment costs. We watched this happen with server virtualization hypervisors, and now it is happening with the Kubernetes container orchestrator too.

Hardware accelerators, like GPUs and FPGAs, offer high computing power and memory bandwidth. However, they are among the most expensive devices in a datacenter, so it is very important to make sure that their resources are shared and kept as fully utilized as possible.

It would all have been a lot easier if the Kubernetes container orchestrator could simply inherit the underlying abstractions of server virtualization hypervisors and the other scale-out extensions that are often added to virtualized servers. But it doesn't work that way.

Kubernetes was not built to run high-performance workloads on FPGAs; it was built to run services on classic CPUs. As a result, Kubernetes lacks many of the pieces needed to run FPGA-accelerated applications efficiently in containers.

FPGA abstraction layer

The first missing piece was an abstraction layer for the FPGAs. Until now, FPGA developers had to embed information about the kernel file (the program that runs on the FPGAs) in their host code, and take care of memory management and FPGA configuration themselves. They also had to allocate specific FPGAs to their application (imagine if software programmers had to explicitly specify which core of an 8-core CPU their application should run on).

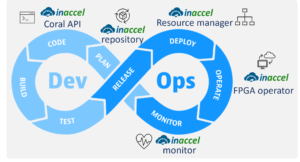

This is why InAccel developed the Coral resource manager, which abstracts away the FPGA resources and decouples software developers from FPGA developers. Software developers simply invoke the function they want to accelerate, and the Coral manager takes care of resource allocation, device configuration, and workload distribution. Essentially, InAccel Coral functions as an abstraction layer for the workloads: it abstracts and streamlines access to the underlying FPGA hardware.
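The article does not show Coral's actual API, so the following is only a toy sketch of the idea in Python. It assumes a hypothetical `ResourceManager` class with an `invoke()` method and simulated `Device` objects; no real FPGA driver is called, and the bitstream file names are placeholders. The caller only names the kernel it wants; the manager picks a card, "reconfigures" it only when a different bitstream is needed, and spreads jobs across cards.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional


@dataclass
class Device:
    """Simulated FPGA card (hypothetical; no real driver is involved)."""
    name: str
    loaded_bitstream: Optional[str] = None
    jobs_run: int = 0
    reconfigurations: int = 0


class ResourceManager:
    """Toy manager: callers name a kernel, the manager chooses the card."""

    def __init__(self, devices: List[Device]) -> None:
        self.devices = devices

    def _pick(self, bitstream: str) -> Device:
        # Least-loaded card first; among equally loaded cards, prefer one
        # that already holds the requested bitstream (no reprogramming).
        return min(
            self.devices,
            key=lambda d: (d.jobs_run, d.loaded_bitstream != bitstream),
        )

    def invoke(self, bitstream: str, kernel: Callable[..., Any], *args: Any) -> Any:
        device = self._pick(bitstream)
        if device.loaded_bitstream != bitstream:
            # A real manager would program the FPGA here; we only record it.
            device.loaded_bitstream = bitstream
            device.reconfigurations += 1
        device.jobs_run += 1
        # The kernel runs on the host in this sketch; a real manager would copy
        # buffers to device memory and launch the hardware kernel instead.
        return kernel(*args)


def vadd(a: List[int], b: List[int]) -> List[int]:
    """Host-side stand-in for a vector-add kernel."""
    return [x + y for x, y in zip(a, b)]


manager = ResourceManager([Device("fpga0"), Device("fpga1")])
# The caller never names a card; it only says which kernel to run.
results = [manager.invoke("vadd.xclbin", vadd, [1, 2], [3, 4]) for _ in range(4)]
print([(d.name, d.jobs_run, d.reconfigurations) for d in manager.devices])
```

Choosing the least-loaded card first and preferring an already-programmed card only as a tie-breaker keeps work spread across the cards; a production manager would also have to weigh the cost of reprogramming a device, which can be expensive.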

FPGA Operator

Another technology InAccel has developed is a Kubernetes FPGA Operator. The InAccel FPGA Operator is a cloud-native way to standardize and automate the deployment of all the components needed to provision FPGA-enabled Kubernetes systems. It delivers a universal accelerator orchestration and monitoring layer that automates scaling and lifecycle management of containerized FPGA applications on any Kubernetes cluster.

The FPGA Operator allows cluster admins to manage their remote FPGA-powered servers the same way they manage CPU-based systems, and lets regular users target particular FPGA types and explicitly consume FPGA resources in their workloads. This makes it easy to bring up a fleet of remote systems and run accelerated applications without additional technical expertise on the ground.
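In Kubernetes, a workload typically consumes a device exposed by a device plugin by listing it as an extended resource under `resources.limits`, and it can be steered to nodes with a particular card through `nodeSelector`. The sketch below builds such a Pod manifest in Python. The resource name `example.com/fpga-u250`, the node label `example.com/fpga-type`, the image, and the helper `fpga_pod()` are all hypothetical placeholders, not the names the InAccel FPGA Operator actually advertises.

```python
import json
from typing import Any, Dict


def fpga_pod(name: str, image: str, fpga_resource: str, count: int,
             node_selector: Dict[str, str]) -> Dict[str, Any]:
    """Build a Pod manifest that requests `count` FPGAs of one type.

    `fpga_resource` is the extended-resource name a device plugin advertises;
    `node_selector` pins the Pod to nodes labelled with a given FPGA type.
    """
    if count < 1:
        raise ValueError("an FPGA workload should request at least one device")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "nodeSelector": dict(node_selector),
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources are whole units and go under limits.
                    "resources": {"limits": {fpga_resource: str(count)}},
                }
            ],
        },
    }


# Hypothetical names: replace with whatever your cluster actually advertises.
manifest = fpga_pod(
    name="vadd-demo",
    image="registry.example.com/vadd:latest",
    fpga_resource="example.com/fpga-u250",
    count=1,
    node_selector={"example.com/fpga-type": "u250"},
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f pod.json`, since Kubernetes accepts JSON as well as YAML manifests; the scheduler then places the Pod only on a node that has a free unit of the requested resource.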

To avoid vendor-specific choices, the InAccel FPGA Operator architecture has been kept broadly compatible. InAccel has partnered with all major Kubernetes vendors (SUSE, Canonical, and Red Hat), so users can run the FPGA Operator regardless of which Kubernetes platform they use.

In addition, the InAccel software stack now integrates with a number of machine learning frameworks, MLOps tools, and public cloud offerings. These include Keras, TensorFlow, PyTorch, and JupyterHub, as well as the Xilinx Vitis AI framework.

Even frameworks that are not pre-integrated can be integrated relatively easily, as long as they run in containers on top of Kubernetes. As for cloud platforms, the InAccel FPGA Operator works with both major cloud providers that offer FPGAs (AWS and Microsoft Azure), as well as on premises.