Evaluating the best hardware platform for your deep learning application can sometimes be tedious and time consuming. Also, the marketing numbers provided by several companies sometimes can be misleading as they refer to specialized benchmarks. MLPerf's mission is to build fair and useful benchmarks for measuring training and inference performance of ML hardware, software, and services. A widely accepted benchmark suite benefits the entire community, including researchers, developers, hardware manufacturers, builders of machine learning frameworks, cloud service providers, application providers, and end users. MLPerf provides a standard based benchmark that allows the performance comparison of several hardware platforms.

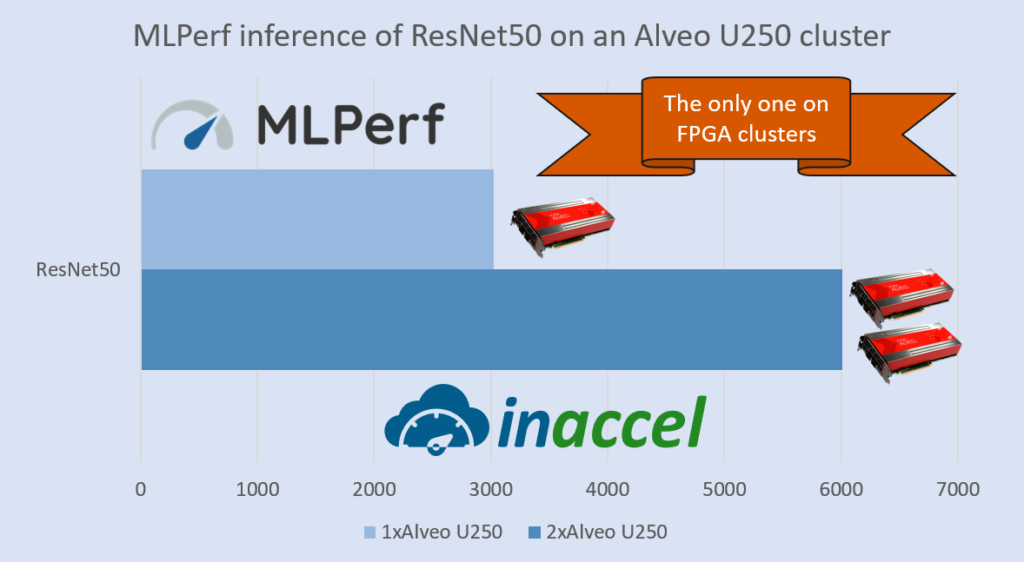

InAccel announces today the results for the MLPerf deep learning inference task using a cluster of Xilinx Alveo FPGA U250 boards. InAccel with its unique FPGA accelerator orchestrator technology is the only company to release results on data-center inference using a cluster of FPGA boards and not just a single FPGA instance. Using the Quantized ResNet50 Dataflow Accelerator from Xilinx Research Labs, InAccel provides an auto-scalable Keras-like library that uses InAccel Coral orchestration layer to automatically distribute the inference workloads to the available FPGAs.

InAccel Coral allows ease of deployment, auto-scaling capabilities, effortless resource management and task scheduling for FPGA resources, making easier than ever the deployment and the utilization of FPGAs in any kind of application.

Our accelerator orchestrator allows simpler integration with high-level programming languages and frameworks as well as seamless sharing from multiple users/processes and fault-tolerant hardware operations. Using InAccel Keras backend the ResNet50 accelerated model can be easily scaled-out to e.g. 16 FPGA boards per server node. MLPerf inference results show that InAccel Keras can achieve up to 6008 FPS on a cluster of 2 Xilinx Alveo U250 boards (Offline scenario). It also allows reduced latency in applications with low-latency constraints. In the case of a SingleStream scenario the latency drops to as low as 7 ms making it ideal for applications where latency is of paramount importance.

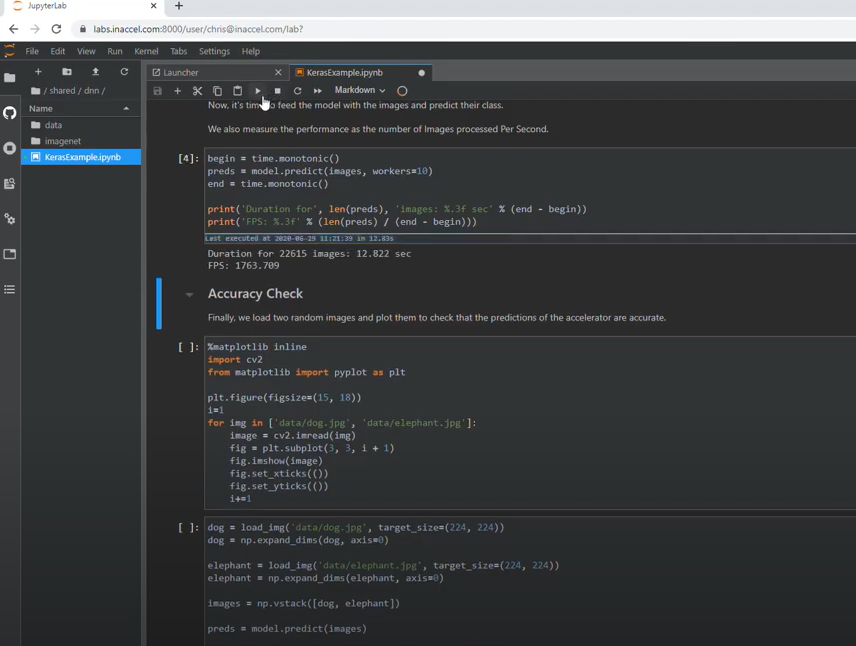

Users may evaluate instantly and for free these results using InAccel Studio, our unique fully managed service that provides every developer and data scientist free access to both FPGA resources and ready-to-use accelerated libraries. Using a familiar Jupyter-based environment, users are able to run new ResNet50 inference benchmarks against their own datasets. Users interested to have exclusive access to FPGA accelerated inference may contact InAccel team and get guidance on how they can use InAccel Keras atop any cluster of FPGAs.

Feel free to check the official MLPerf inference results here.

Note: The managed InAccel FPGA Studio service is available only for demonstration purposes. Since it is completely free, multiple users may access the FPGA resources concurrently, which may affect the performance of the applications. If you are interested to deploy InAccel Studio on your own data-center with multiple FPGA boards or to run your applications in the cloud exclusively, contact us at info@inaccel.com.