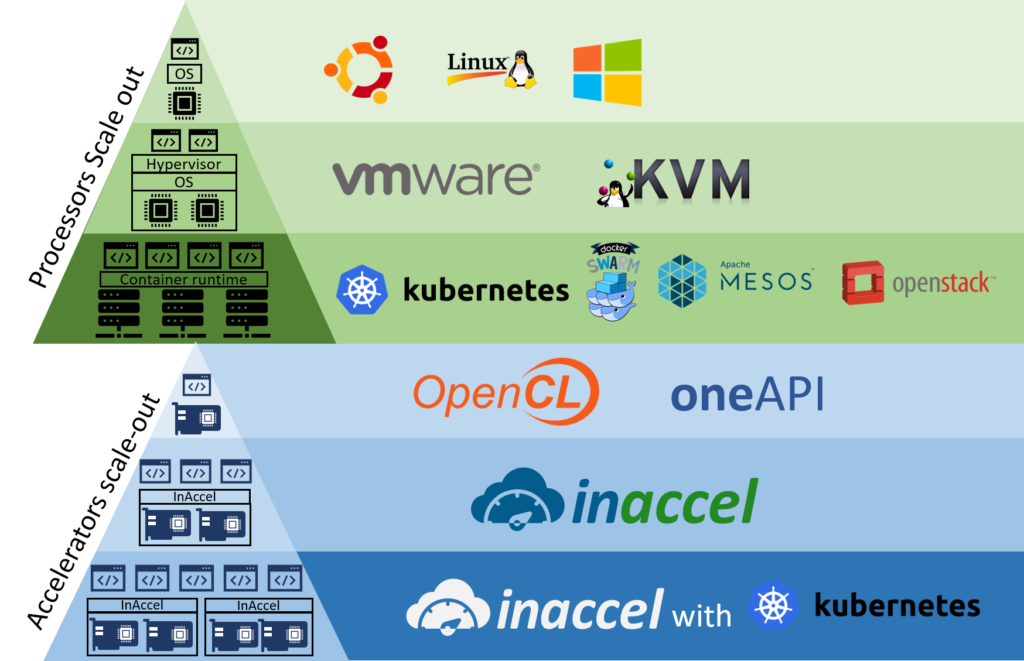

FPGAs can offer significant speedups in many applications, but they lack frameworks for easy deployment and scaling. In the CPU world, the software community has moved from the single-node deployment era to the virtualized deployment era and, most recently, to multi-tenant, multi-server deployment using Kubernetes. In this article we discuss how a corresponding framework in the FPGA world can make the deployment, scaling and resource management of FPGA clusters in distributed systems much easier.

The Kubernetes documentation describes how we have moved from the traditional deployment era to the container deployment era:

Traditional deployment era: Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications in a physical server, and this caused resource allocation issues. For example, if multiple applications run on a physical server, there can be instances where one application would take up most of the resources, and as a result, the other applications would underperform. A solution for this would be to run each application on a different physical server. But this did not scale as resources were underutilized, and it was expensive for organizations to maintain many physical servers.

Virtualized deployment era: As a solution, virtualization was introduced. It allows you to run multiple Virtual Machines (VMs) on a single physical server’s CPU. Virtualization allows applications to be isolated between VMs and provides a level of security as the information of one application cannot be freely accessed by another application.

Virtualization allows better utilization of resources in a physical server and allows better scalability because an application can be added or updated easily, reduces hardware costs, and much more. With virtualization you can present a set of physical resources as a cluster of disposable virtual machines.

Each VM is a full machine running all the components, including its own operating system, on top of the virtualized hardware.

Container deployment era: Containers are similar to VMs, but they have relaxed isolation properties to share the Operating System (OS) among the applications. Therefore, containers are considered lightweight. Similar to a VM, a container has its own filesystem, CPU, memory, process space, and more. As they are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

Containers have become popular because they provide extra benefits, such as:

- Agile application creation and deployment: increased ease and efficiency of container image creation compared to VM image use.

- Dev and Ops separation of concerns: create application container images at build/release time rather than deployment time, thereby decoupling applications from infrastructure.

- Observability: not only surfaces OS-level information and metrics, but also application health and other signals.

- Cloud and OS distribution portability: Runs on Ubuntu, RHEL, CoreOS, on-prem, Google Kubernetes Engine, and anywhere else.

- Application-centric management: Raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

- Loosely coupled, distributed, elastic, liberated micro-services: applications are broken into smaller, independent pieces and can be deployed and managed dynamically – not a monolithic stack running on one big single-purpose machine.

- Resource isolation: predictable application performance.

- Resource utilization: high efficiency and density.

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. This is why many companies now use Kubernetes to deploy and scale Docker containers. Kubernetes provides a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, and provides instant deployment and easy resource management.
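The way Kubernetes "takes care of scaling and failover" is by continuously reconciling the desired state (for example, a replica count) with the observed state. The following is a toy model of that control loop in Python, not Kubernetes code; the `Cluster` class, the `reconcile` function and the pod names are hypothetical and only illustrate the idea.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of a cluster that only tracks which (hypothetical) pods are running."""
    running: set[str] = field(default_factory=set)
    _ids: itertools.count = field(default_factory=itertools.count)

    def start_pod(self) -> str:
        name = f"app-{next(self._ids)}"
        self.running.add(name)
        return name

    def stop_pod(self, name: str) -> None:
        self.running.discard(name)


def reconcile(cluster: Cluster, desired_replicas: int) -> None:
    """One pass of a simplified control loop: converge the actual state to the desired state."""
    actual = len(cluster.running)
    if actual < desired_replicas:
        # Scale up, or replace pods that have failed.
        for _ in range(desired_replicas - actual):
            cluster.start_pod()
    elif actual > desired_replicas:
        # Scale down by stopping the surplus pods.
        for name in sorted(cluster.running)[: actual - desired_replicas]:
            cluster.stop_pod(name)


cluster = Cluster()
reconcile(cluster, 3)        # starts app-0, app-1, app-2
cluster.stop_pod("app-1")    # simulate a failure
reconcile(cluster, 3)        # failover: app-3 replaces the failed pod
print(len(cluster.running))  # → 3
```

The user only declares the desired number of replicas; the loop decides when to start or stop instances. This declarative model is what the FPGA orchestrator discussed below aims to bring to FPGA clusters.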

InAccel FPGA deployment on distributed systems

The need for powerful accelerators

However, general-purpose processors can no longer increase their performance at the rate they did in the past, due to the end of Moore’s law and Dennard scaling, so more efficient computing elements have to be used. This is especially true in applications like machine learning, where the required computing power keeps growing every 4-5 months and high-performance computing platforms are therefore required.

According to John Hennessy and David Patterson, the only path left to higher-performance computing systems is domain-specific accelerators: digital circuits developed for specific tasks that provide 10x-200x speedups compared to CPUs while consuming much less energy.

FPGAs (or Adaptive Compute Acceleration Platforms, ACAPs) are programmable domain-specific accelerators that offer both the performance of tailor-made architectures and high flexibility. FPGAs can be configured for specific tasks, offering higher performance than CPUs, and because they are programmable, they can be loaded with different kinds of accelerators (IP blocks) depending on the application’s requirements.

FPGAs offer significant advantages over other computing platforms, such as higher performance, lower latency and better energy efficiency. However, widespread adoption by the software community requires frameworks that make deployment easy, allowing software developers to deploy FPGAs in the same way as any other computing platform.

Cloud providers offer FPGAs only as infrastructure, which makes it hard for the software community to deploy their applications. For example, if an application needs to be deployed on 8 FPGAs, software developers have to distribute the workload across the available FPGAs manually. Similarly, if multiple users or applications want access to a cluster of FPGAs, software developers have to build their own solution to prevent resource conflicts or to serialize access to the FPGA resources.
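To make the 8-FPGA example concrete, here is a minimal sketch of the bookkeeping a developer ends up writing by hand without an orchestrator: partition the input, pin each chunk to a device, and gather the results in order. `run_on_fpga` is a hypothetical stand-in for a vendor-specific kernel invocation; here it simply squares the inputs in software so that the sketch runs anywhere.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_FPGAS = 8  # the example from the text: an application deployed on 8 FPGAs


def run_on_fpga(device_id: int, chunk: list[int]) -> list[int]:
    """Hypothetical stand-in for running a kernel on one FPGA (squares inputs in software)."""
    return [x * x for x in chunk]


def split(data: list[int], parts: int) -> list[list[int]]:
    """Split data into `parts` contiguous chunks whose sizes differ by at most one."""
    q, r = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + q + (1 if i < r else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def manual_offload(data: list[int]) -> list[int]:
    """Hand-coded distribution: partition, pin each chunk to a device, then gather."""
    chunks = split(data, NUM_FPGAS)
    with ThreadPoolExecutor(max_workers=NUM_FPGAS) as pool:
        futures = [pool.submit(run_on_fpga, dev, chunk)
                   for dev, chunk in enumerate(chunks)]
        results: list[int] = []
        for f in futures:  # gather in device order to preserve the input order
            results.extend(f.result())
    return results


print(manual_offload(list(range(10))))
```

Note what this code does not handle: a second user or process calling `manual_offload` at the same time would target the same devices with no coordination, which is exactly the conflict and serialization problem described above.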

FPGA deployment and scaling on distributed systems

With these issues in mind, InAccel developed the world’s first FPGA orchestrator, which allows easy deployment, instant scaling and seamless resource management of FPGA clusters. InAccel’s FPGA orchestrator allows FPGAs to be deployed as a platform, ready to be used by software developers just like any other computing system.

InAccel’s FPGA orchestrator abstracts away the available FPGA resources, serving as an OS layer and a Kubernetes-like layer for the applications that need to be deployed on FPGAs. The main advantages that InAccel’s orchestrator offers are:

- Easy deployment: Software developers can invoke the functions they want to accelerate in the same way as in any other software framework. They do not need to worry about buffer management, FPGA configuration files, etc. InAccel’s orchestrator overloads the specific functions that need to be offloaded to the FPGAs, so users can call accelerators just as they would call a software library. Developers can deploy their applications using simple C/C++, Python and Java API calls instead of OpenCL.

- Seamless resource management: Multiple applications, users or processes can share the available resources without having to manage contention and conflicts manually. InAccel’s orchestrator serves as the scheduler that allows the available FPGA resources in a cluster to be shared by multiple applications, processes or users (tenants).

- Instant scaling: Users simply invoke the function they want to accelerate as many times as they need, without having to distribute the calls to the available resources manually. Whether there are many kernels on a single FPGA or multiple FPGAs on a single server, InAccel’s Coral Orchestrator performs the load balancing and distributes the function calls across the available resources. InAccel also provides a Kubernetes plugin that advertises the available resources to Kubernetes, allowing applications to scale out to multiple servers (with multiple FPGAs per server).

- Heterogeneous deployment: InAccel’s bitstream repository allows the deployment of fully heterogeneous FPGA clusters. The repository holds different bitstreams for the available FPGAs and is integrated with the Coral Orchestrator, relieving the user of the complexity of FPGA-specific bitstreams. Users can deploy their applications to heterogeneous FPGA clusters (e.g. different FPGA cards from the same or different vendors), and the InAccel FPGA orchestrator takes care of loading the right bitstream.
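The heterogeneous-deployment idea boils down to a lookup keyed by both the requested kernel and the card it will run on. The sketch below models that lookup; it is not InAccel’s repository format or API, and the class names, platform strings and bitstream file names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fpga:
    device_id: int
    platform: str  # hypothetical platform identifier (card model + shell version)


class BitstreamRepository:
    """Toy repository mapping (kernel name, FPGA platform) -> bitstream file."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], str] = {}

    def add(self, kernel: str, platform: str, bitstream: str) -> None:
        self._entries[(kernel, platform)] = bitstream

    def lookup(self, kernel: str, platform: str) -> str:
        try:
            return self._entries[(kernel, platform)]
        except KeyError:
            raise LookupError(
                f"no bitstream for kernel {kernel!r} on platform {platform!r}"
            ) from None


def plan_configuration(fpgas: list[Fpga], kernel: str,
                       repo: BitstreamRepository) -> dict[int, str]:
    """Choose the matching bitstream for every FPGA in a heterogeneous cluster.
    Returns device_id -> bitstream; a real orchestrator would then program each device."""
    return {f.device_id: repo.lookup(kernel, f.platform) for f in fpgas}


# Usage: two different (hypothetical) card types in the same cluster.
repo = BitstreamRepository()
repo.add("matmul", "card-a", "matmul_card_a.bin")
repo.add("matmul", "card-b", "matmul_card_b.bin")
cluster = [Fpga(0, "card-a"), Fpga(1, "card-b"), Fpga(2, "card-a")]
plan = plan_configuration(cluster, "matmul", repo)
print(plan)
```

The caller only names the kernel (`"matmul"`); which file ends up on which card is resolved from the repository, so adding a new card type means adding entries, not changing application code.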

InAccel’s approach of using an FPGA orchestrator as middleware makes it easy for software developers to deploy FPGAs. The orchestrator schedules function calls onto the available FPGA resources. This allows an application to scale out to multiple FPGAs in the same server without the user having to define manually which function will be executed on which FPGA. Just as in the software world, where users do not specify on which core an application will run, the InAccel manager/orchestrator automatically dispatches the functions to the available resources in the FPGA cluster.
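A scheduler of this kind can be sketched in a few dozen lines: callers invoke an ordinary-looking function, and the scheduler picks a device, serializes access to it, and releases it afterwards. This is a simplified illustration, not the Coral Orchestrator’s implementation; the "least in-flight calls, then fewest total calls" policy is one reasonable choice we assume here, and `FpgaScheduler` and `vector_add` are hypothetical names. Devices are simulated, so each "kernel" is just a Python callable.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class FpgaScheduler:
    """Minimal sketch: dispatch each call to the least-busy of `num_fpgas` simulated devices."""

    def __init__(self, num_fpgas: int) -> None:
        self._lock = threading.Lock()                 # protects the counters below
        self._in_flight = [0] * num_fpgas             # outstanding calls per device
        self._device_locks = [threading.Lock() for _ in range(num_fpgas)]
        self.calls_per_device = [0] * num_fpgas       # lets us observe the balance

    def _acquire_device(self) -> int:
        with self._lock:
            # Fewest in-flight calls first; ties go to the device with fewest total calls.
            dev = min(range(len(self._in_flight)),
                      key=lambda d: (self._in_flight[d], self.calls_per_device[d]))
            self._in_flight[dev] += 1
            self.calls_per_device[dev] += 1
            return dev

    def _release_device(self, dev: int) -> None:
        with self._lock:
            self._in_flight[dev] -= 1

    def submit(self, kernel: Callable[..., object], *args: object) -> object:
        dev = self._acquire_device()
        try:
            # Serialize access: only one call runs on a given device at a time.
            with self._device_locks[dev]:
                return kernel(*args)
        finally:
            self._release_device(dev)


scheduler = FpgaScheduler(num_fpgas=4)


def vector_add(a: list[int], b: list[int]) -> object:
    """Called like a plain library function; the scheduler decides which device runs it."""
    return scheduler.submit(lambda x, y: [i + j for i, j in zip(x, y)], a, b)


# Several concurrent callers (e.g. users or processes) sharing the same devices.
with ThreadPoolExecutor(max_workers=8) as pool:
    results = list(pool.map(vector_add, [[1, 2]] * 100, [[3, 4]] * 100))
print(sum(scheduler.calls_per_device))
```

The callers never name a device index; placement, mutual exclusion and balancing all live in one place, which is the property the orchestrator provides across the FPGAs of a server.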

It also automatically configures and initializes the FPGAs with the right bitstream, making it easier than ever for software developers to deploy FPGA clusters. In effect, it serves as a Kubernetes for multiple FPGAs in the same server. Combined with InAccel’s Kubernetes plugin, the FPGA manager can also scale out to multiple servers with multiple FPGAs, making it ready for enterprise-grade deployment.

FPGAs offer a unique combination of advantages: high throughput, low latency and energy efficiency. With OpenCL and C/C++, it is now easier than ever to create your own accelerator. However, to enable the widespread adoption of FPGAs, we must offer frameworks that allow them to be deployed as easily as computing resources like CPUs and GPUs. InAccel’s FPGA orchestrator provides an abstraction layer that serves as an OS for an FPGA cluster and allows easy deployment, instant scaling and seamless resource management for easier integration with the software stack.