Enterprise workloads today often run in Docker containers on top of Kubernetes clusters, which automate the deployment, scaling, and management of containerized applications. Dynamic allocation of hardware resources enables high resource utilization, high availability, and fault-tolerant application deployment.

FPGAs are an emerging accelerator platform that can offer enterprise workloads several advantages, such as high throughput, low latency, and reduced energy consumption, in applications like machine learning, genomics, quantitative finance, and deep neural networks (DNNs).

Today, most enterprises are still reluctant to adopt FPGAs widely, mainly because there is no distributed system for FPGAs and widely used frameworks like Kubernetes do not fully support them. Enterprise customers expect the simplicity and resource availability of the virtualized data center built on CPUs and GPUs.

As some enterprises adopt FPGAs to speed up their applications, they find that the hallmarks of virtualized infrastructure (management at scale, simplified maintenance, and visibility) are not necessarily available in the FPGA world.

Virtualization of accelerated workloads needs to support the unique nature of enterprise workloads while remaining easy for IT to manage and maintain. In the GPU domain, there have been several efforts to virtualize GPU resources. Bitfusion (acquired by VMware) provided a framework for GPU virtualization, and Run.ai provides a framework that allows the virtualization of AI resources (e.g., GPUs).

Unfortunately, the FPGA world lacks frameworks that allow easy deployment, scaling, and dynamic management of FPGA resources in the way that is already standard for CPUs and GPUs.

For example, static resource allocation limits experiment size and speed, leads to low utilization, and leaves IT without control. Without automatic deployment and scaling, both must be done manually, which is time-consuming.

It is also currently very hard for multiple users to deploy their applications on a shared FPGA cluster. A static, usually manual, allocation mechanism is required to assign the available FPGAs to users.

Scalability is similarly lacking. If a software developer needs to scale an application to multiple FPGAs, this currently has to be done manually: the developer must specify which tasks run on which FPGA, which is time-consuming and error-prone.

The only way to fully benefit from the power of FPGAs is to provide a framework that offers dynamic resource management, easy deployment, and instant scaling.

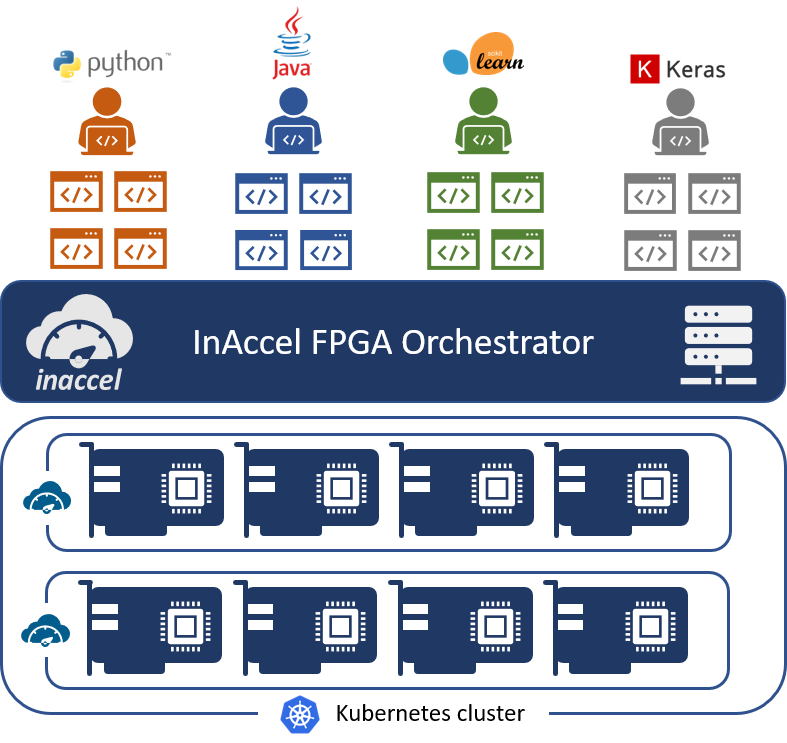

With these issues in mind, InAccel developed a unique FPGA orchestrator that enables easy deployment, instant scaling, and dynamic resource management of FPGA clusters. InAccel's FPGA orchestrator lets FPGAs be deployed as a platform that software developers can use just like any other computing system.

InAccel's FPGA orchestrator abstracts away the available FPGA resources, serving as an OS layer and a Kubernetes-like layer for the applications deployed on FPGAs. The main advantages it offers are:

- Easy deployment: Software developers can invoke the functions they want to accelerate in the same way they would in any other software framework. They do not need to worry about buffer management, FPGA configuration files, and similar details. InAccel's orchestrator overloads the specific functions that need to be offloaded to the FPGAs, so accelerators can be used just like software libraries. Developers can deploy their applications using simple C/C++, Python, and Java API calls instead of OpenCL.

- Dynamic resource management: Multiple applications, processes, or users can share the available resources without having to manage contention and conflicts manually. InAccel's orchestrator acts as the scheduler that shares the FPGA resources of a cluster among multiple applications, processes, or users (tenants).

- Instant Scaling: Users simply invoke the function they want to accelerate as many times as they need, without having to distribute the calls manually across the available resources. Whether there are many kernels on a single FPGA or multiple FPGAs in a single server, InAccel's Coral Orchestrator performs the load balancing and distributes the accelerated functions to the available resources. InAccel also provides a Kubernetes plugin that advertises the available resources to Kubernetes, allowing applications to scale out to multiple servers (with multiple FPGAs per server).

- Heterogeneous deployment: InAccel's unique bitstream repository enables fully heterogeneous FPGA clusters. The repository holds different bitstreams for the available FPGAs and is integrated with the Coral Orchestrator, relieving users of the complexity of FPGA-specific bitstreams. Users can deploy their applications to heterogeneous FPGA clusters (e.g., different FPGA cards from the same or different vendors), and the InAccel FPGA orchestrator takes care of loading the right bitstream.
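To make the scheduling and bitstream-selection ideas above more concrete, here is a minimal Python sketch under stated assumptions. It is not InAccel's API or implementation: the classes `BitstreamRepository`, `Fpga`, and `ToyOrchestrator`, the platform names, and the bitstream file names are all hypothetical. The sketch models a repository that maps an (accelerator, platform) pair to a bitstream, and a dispatcher that sends each call to the least-loaded FPGA with a matching bitstream, preferring on ties a board where that bitstream is already loaded so as to avoid reconfiguration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class BitstreamRepository:
    """Hypothetical repository: maps (accelerator, FPGA platform) to a bitstream file."""
    entries: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def register(self, accelerator: str, platform: str, bitstream: str) -> None:
        self.entries[(accelerator, platform)] = bitstream

    def lookup(self, accelerator: str, platform: str) -> Optional[str]:
        return self.entries.get((accelerator, platform))


@dataclass
class Fpga:
    """One FPGA board in a hypothetical cluster."""
    name: str
    platform: str
    loaded: Optional[str] = None  # bitstream currently configured on the board
    pending: int = 0              # requests dispatched but not yet completed


class ToyOrchestrator:
    """Least-loaded dispatcher across heterogeneous FPGAs (illustrative only)."""

    def __init__(self, fpgas: List[Fpga], repo: BitstreamRepository) -> None:
        self.fpgas = {f.name: f for f in fpgas}
        self.repo = repo

    def submit(self, accelerator: str) -> str:
        # Only boards whose platform has a matching bitstream are candidates.
        candidates = [f for f in self.fpgas.values()
                      if self.repo.lookup(accelerator, f.platform) is not None]
        if not candidates:
            raise LookupError(f"no bitstream registered for {accelerator!r}")

        def cost(f: Fpga) -> Tuple[int, bool]:
            # Fewest outstanding requests first; on a tie, prefer a board that
            # already holds the right bitstream (False sorts before True).
            needs_reload = f.loaded != self.repo.lookup(accelerator, f.platform)
            return (f.pending, needs_reload)

        target = min(candidates, key=cost)
        bitstream = self.repo.lookup(accelerator, target.platform)
        if target.loaded != bitstream:
            target.loaded = bitstream  # stands in for reprogramming the device
        target.pending += 1
        return target.name

    def complete(self, fpga_name: str) -> None:
        # Called when a dispatched request finishes on the given board.
        self.fpgas[fpga_name].pending -= 1


if __name__ == "__main__":
    repo = BitstreamRepository()
    repo.register("gzip", "vendor_a_card", "gzip_vendor_a.bit")
    repo.register("gzip", "vendor_b_card", "gzip_vendor_b.bit")
    orch = ToyOrchestrator([Fpga("fpga0", "vendor_a_card"),
                            Fpga("fpga1", "vendor_b_card")], repo)
    # Four calls to the same function are spread over both boards.
    print([orch.submit("gzip") for _ in range(4)])  # ['fpga0', 'fpga1', 'fpga0', 'fpga1']
```

The caller only names the function it wants ("gzip") and never picks a board or a bitstream; that choice is made centrally. A production orchestrator presumably has to handle much more (device memory, failures, placement across servers), so treat this only as an illustration of the idea.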

The main benefit of InAccel's approach is that it allows seamless integration of FPGAs with widely used frameworks such as:

- Apache Spark

- Scikit-learn

- Kubernetes

- Kubesphere

- Kubeflow

- Jupyter

- BinderHub

- Keras

- H2O

- Java

- Python

- SQL Server 2019

FPGAs offer a unique combination of advantages: high throughput, low latency, and energy efficiency. With OpenCL and C/C++, it is now easier than ever to create your own accelerator. However, to enable the widespread adoption of FPGAs, we must offer frameworks that allow easy deployment in the same way as for CPUs and GPUs. InAccel's unique FPGA orchestrator provides the abstraction layer that serves as an OS for an FPGA cluster and allows easy deployment, instant scaling, and seamless resource management for easier integration with the software stack.

Start accelerating your application instantly with the InAccel FPGA orchestrator.